Background

The first edition of the SCImago IBER was published in 2009 as an annual ranking of university-sector institutions in Ibero-American countries with a minimum scientific output of one document in Scopus-indexed journals over a fixed five-year period.

Until 2021, the SCImago IBER was conceived as a league table whose purpose was to disseminate information on institutions’ performance in research, innovation and societal impact, characterizing them in terms of their scientific, economic and social contribution. To this end, the ranking was published as a report in which universities were ranked by the number of documents published, together with information on the different indicators for each institution.

In addition to the table of institutions and their indicators, each edition offers an analysis of the university sector in the region. The last two editions analyzed the most productive countries in greater depth, for example by detailing their behavior by type of university (public or private), comparing scientific output in national journals with output in international journals, and providing co-citation analysis by subject area, among other analyses[1].

Updates for 2024

The SCImago IBER 2024 edition includes three new indicators that more specifically reflect the societal impact achieved by an institution, region or country: the generation of new knowledge related to the Sustainable Development Goals defined by the United Nations, the participation of women in research, and the use of research results in the creation or improvement of public policies. Each indicator is defined in the indicators section.

What possibilities does the SCImago IBER interface offer?

At the top of the map, information can be accessed by country, by region (within each country) and by institution. In each case, a side menu on the left gives access to filters that can be combined: analysis period (always a five-year period), publication language, sector, subject area and SciELO publications. At the regional level, the country filter is particularly useful because it restricts the view to the regions of the selected country; at the institutional level, the country, region and SIR Institutions filters are also available. The same menu also allows the search to be narrowed by setting a minimum scientific output, which makes it easier to analyze institutions, regions or countries with similar characteristics.

On the right side, controls allow users to zoom in or out, switch to full-screen view and select an area of interest directly on the map (once an area is selected, it can be zoomed into or the selection removed).

Each bubble on the map represents an institution, a region or a country, depending on the case, and its size and color reflect the unit's values for two selected indicators. By default, size is determined by the number of published documents (output) and color by the normalized impact. However, the controls at the bottom right allow any of the 16 available indicators to be chosen to define the size and color of the bubbles.

In all cases, once the unit of analysis and the filters have been set on the map, a table at the bottom of the screen lists the selected countries, regions or institutions with the value of each indicator. The list is sorted by output by default, but it can be sorted by any other indicator by clicking the corresponding column header. Clicking the name of an institution, region or country in the list opens its personalized profile in a new window, which shows all the indicators, the increase or decrease in each of them relative to the previous five-year period and, for institutions only, a map of their collaboration network.

Methodology

As with the SIR-SCImago Institutions Rankings (https://www.scimagoir.com/) and the other products developed by the SCImago Research Group, the indicators are drawn from renowned sources, in this case Scopus, Unpaywall, Patstat, PlumX and Mendeley. They have solid methodological support and have been widely discussed and accepted within the international scientific community (Van-Raan, 2004; Moed, 2005; 2015; Wilsdon et al., 2015; Waltman, 2016; Bornmann, 2017; Guerrero-Bote; Sánchez-Jiménez; Moya-Anegón, 2019; Bornmann et al., 2020; Guerrero-Bote; Moed; Moya-Anegón, 2021).

To calculate the indicators, SCImago has developed a methodology that requires an exhaustive standardization of the information, which involves:

- The definition and unique identification of institutions. Standardizing institutions is difficult because their names are often ambiguous in authors' affiliations. Typical cases handled in this task include institutional mergers or splits and name changes.

- The attribution of publications and citations to each institution. The institutional affiliation of every author is taken into account, multiple affiliations are assigned as appropriate, and duplicate records sharing the same DOI and/or title are identified.

- The grouping of institutions by sector. The institutions have been grouped into the sectors of Universities, Government, Health, Companies and Non-profit.

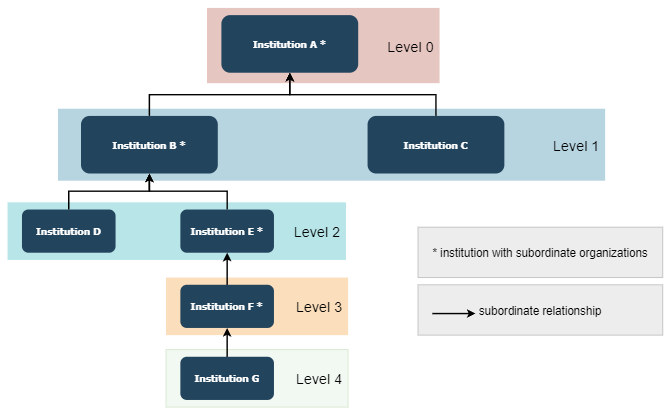

- The hierarchical grouping of institutions. Institutions that have subordinate structures (marked with an asterisk in the tables) have been grouped with them to reflect the scientific output capacity of the whole.
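A hypothetical sketch of how output could be aggregated up an institutional hierarchy so that a parent reflects the capacity of the whole. The identifiers and data layout are invented for illustration, and counting a document co-signed by two sub-units only once for their common parent is our assumption, not a rule stated in the text.

```python
from collections import defaultdict
from typing import Optional


def roll_up_output(parent_of: dict[str, Optional[str]],
                   docs_by_unit: dict[str, set[str]]) -> dict[str, int]:
    """Count distinct documents per institution, including those of its subordinate units.

    parent_of: hypothetical map from institution ID to parent ID (None for a top-level institution).
    docs_by_unit: hypothetical map from institution ID to the IDs of documents it signs directly.
    Assumes the hierarchy is a tree (no cycles).
    """
    rolled = defaultdict(set)
    for unit, docs in docs_by_unit.items():
        node = unit
        # Propagate this unit's documents to itself and to every ancestor up to the root.
        while node is not None:
            rolled[node] |= docs  # set union: a document shared by two sub-units counts once
            node = parent_of.get(node)
    return {unit: len(doc_ids) for unit, doc_ids in rolled.items()}


# Hypothetical three-level hierarchy: university > faculty of medicine > university hospital.
parent_of = {"UNIV": None, "UNIV-MED": "UNIV", "UNIV-HOSP": "UNIV-MED"}
docs_by_unit = {"UNIV": {"d1"}, "UNIV-MED": {"d2", "d3"}, "UNIV-HOSP": {"d3", "d4"}}
print(roll_up_output(parent_of, docs_by_unit))  # {'UNIV': 4, 'UNIV-MED': 3, 'UNIV-HOSP': 2}
```

Document d3 is signed by both the faculty and the hospital, yet the university's total is 4 rather than 5 because the roll-up works on sets of document IDs rather than on summed counts.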

Depending on their position in the hierarchy, institutions are classified into levels from 0 (the highest level, for parent institutions) to 4 (the lowest level). See the example below:

For ranking purposes, the indicators are calculated every year from the results of a five-year period ending the year before the edition of the ranking. For example, the SIR Iber 2024 shows results for the five-year period 2019-2023. Since 2023, the SIR Iber has included Ibero-American institutions from all sectors that have published at least one document in Scopus-indexed journals during the analysis period[2].
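The window rule above reduces to simple arithmetic on the edition year. A minimal sketch; the function name and the optional `length` parameter are illustrative and not part of any SCImago tool:

```python
def analysis_window(edition_year: int, length: int = 5) -> tuple[int, int]:
    """Return the first and last publication years covered by a given edition.

    Follows the rule stated in the text: a five-year window ending the year
    before the edition. `length` is only a hypothetical knob for illustration.
    """
    last_year = edition_year - 1
    first_year = last_year - length + 1
    return first_year, last_year


print(analysis_window(2024))  # (2019, 2023)
print(analysis_window(2023))  # (2018, 2022), the previous period used for comparisons in profiles
```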

Indicators and Measurement Factors

The SCImago Iber contains information on 16 indicators that reflect scientific activity in three areas: research, innovation and societal impact. Together they provide a comprehensive overview of the research performance of an institution, a region or a country, covering its capacity for scientific output, its links with the productive sector, and the dissemination and reinforcement of its output through good practices in web-based scientific communication.

Research

The indicators of the research factor seek to reflect an institution's capacity to generate scientific products and disseminate them through recognized scientific communication channels. To this end, the source of information is the scientific output in Scopus-indexed journals, since this type of publication involves rigorous standardization, editing and evaluation processes that attest to the validity of the published results (Bordons; Fernández; Gómez, 2002; Van-Raan, 2004; Moed, 2005; 2009; Waltman et al., 2011; Codina-Canet; Olmeda-Gómez; Perianes-Rodríguez, 2013; Waltman, 2016).

The impact indicators are built around the citation, on the understanding that citations establish the links between people, ideas, journals and institutions that make up a field of study that can be analyzed quantitatively. A citation also implies recognition of the usefulness of the cited document and provides a time link between the publication of the cited references and the citations they receive (Bornmann, Mutz, Neuhaus, & Daniel, 2008; Moed, 2009; Mingers & Leydesdorff, 2015; Gorraiz et al., 2021).

Accordingly, although the number of documents produced is taken into account, much of the value of the SIR Iber lies in the information on the institutional, regional or national contribution of these documents to the global scientific field in terms of excellence, leadership and normalized impact. Indicators such as the number of open access publications, the output in journals not edited by the unit of analysis and the capacity to carry out editorial management also provide valuable information for different types of analysis.

All document types in Scopus are considered except retracted documents, errata and preprints.
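As an illustration of this rule, the sketch below drops the excluded document types before counting what remains, which is the quantity the Output indicator reports. The type labels are hypothetical placeholders; the actual Scopus document-type codes may differ.

```python
# Hypothetical document-type labels; Scopus's own type codes may differ.
EXCLUDED_TYPES = frozenset({"retracted", "erratum", "preprint"})


def eligible_documents(docs: list[dict]) -> list[dict]:
    """Keep only the document types that count towards the indicators."""
    return [d for d in docs if d["type"].lower() not in EXCLUDED_TYPES]


def output(docs: list[dict]) -> int:
    """Output: number of eligible documents."""
    return len(eligible_documents(docs))


docs = [
    {"id": "a", "type": "Article"},
    {"id": "b", "type": "Erratum"},
    {"id": "c", "type": "Review"},
    {"id": "d", "type": "Preprint"},
]
print(output(docs))  # 2
```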

Indicators

Excellence with Leadership

Total number of documents published by an institution, region or country whose corresponding author belongs to that unit of analysis and that are also among the 10% most cited documents in their subject category. It reflects the institution's ability to lead high-quality research (Moya-Anegón et al., 2013; Bornmann et al., 2014). It is a size-dependent indicator.
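The difference between Scientific Leadership, Excellence and Excellence with Leadership can be illustrated by counting the same set of documents three ways. In this hypothetical sketch the `Document` fields are illustrative, the top-10% flag is assumed to be precomputed per subject category, and a unit is taken to lead a document when it appears among the corresponding author's affiliations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    units: frozenset[str]                # every unit of analysis in the affiliations
    corresponding_units: frozenset[str]  # unit(s) of the corresponding author
    in_top10: bool                       # among the 10% most cited in its category (precomputed)


def leadership_counts(docs: list[Document], unit: str) -> dict[str, int]:
    """Leadership, excellence and their intersection for one unit of analysis."""
    signed = [d for d in docs if unit in d.units]
    led = [d for d in signed if unit in d.corresponding_units]
    return {
        "scientific_leadership": len(led),
        "excellence": sum(d.in_top10 for d in signed),
        "excellence_with_leadership": sum(d.in_top10 for d in led),
    }


docs = [
    Document("d1", frozenset({"A", "B"}), frozenset({"A"}), True),   # led by A, highly cited
    Document("d2", frozenset({"A", "B"}), frozenset({"B"}), True),   # led by B, highly cited
    Document("d3", frozenset({"A"}), frozenset({"A"}), False),       # led by A, not top 10%
]
print(leadership_counts(docs, "A"))
# {'scientific_leadership': 2, 'excellence': 2, 'excellence_with_leadership': 1}
```

Unit A takes part in two highly cited documents but leads only one of them, so its Excellence with Leadership is 1.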

Normalized Impact

The normalized impact (NI) is calculated on the leadership output of the institution, region or country, following the item-oriented field-normalized citation score average methodology of the Karolinska Institutet[3]. This indicator reflects the impact of the knowledge generated by an institution within the international scientific community.

Citation values are normalized individually for each article. The resulting values, expressed as decimal numbers, are centered on the world average impact (1). Therefore, an NI of 0.8 means that the institution's scientific output is cited 20% below the world average, whereas an NI of 1.3 means that its output is cited 30% above the world average (Rehn & Kronman, 2008; González-Pereira, Guerrero-Bote, & Moya-Anegón, 2010; Guerrero-Bote & Moya-Anegón, 2012). It is a size-independent indicator.
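A minimal sketch of the item-oriented average described above, assuming each led document comes with its citation count and an expected (world-average) citation count for comparable documents. How "comparable" is defined is not stated here; grouping by subject category, publication year and document type is our assumption. The last example shows why normalizing per article matters: averaging per-document ratios differs from dividing total citations by total expected citations.

```python
def normalized_impact(led_docs: list[tuple[int, float]]) -> float:
    """Item-oriented normalized impact over a unit's led documents.

    Each tuple is (citations received, expected citations). The expected value is
    assumed to be the world mean for documents of the same subject category,
    publication year and document type. Documents with no positive expected value are skipped.
    """
    ratios = [cites / expected for cites, expected in led_docs if expected > 0]
    if not ratios:
        raise ValueError("no led documents with a positive expected citation rate")
    # Normalize each document first, then average the ratios (not sum(cites) / sum(expected)).
    return sum(ratios) / len(ratios)


print(normalized_impact([(4, 5.0), (6, 7.5)]))   # 0.8: cited 20% below the world average
print(normalized_impact([(13, 10.0)]))           # 1.3: cited 30% above the world average
# Item-oriented: (2.0 + 0.0) / 2 = 1.0, whereas total citations / total expected = 10 / 25 = 0.4.
print(normalized_impact([(10, 5.0), (0, 20.0)]))  # 1.0
```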

Output

Total number of documents published by an institution, region or country in Scopus-indexed journals. This indicator shows an institution's capacity to carry out research that results in scientific output (Romo-Fernández et al., 2011; OCDE; SCImago Research Group, 2015). It is a size-dependent indicator.

Scientific Talent Pool

Number of distinct authors from the unit of analysis who have participated in its published documents. It reflects the number of active researchers and, consequently, the size of the research workforce. It is a size-dependent indicator.
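Counting the Scientific Talent Pool amounts to counting distinct author identifiers, so an author who signs several documents is counted once. This sketch assumes author names have already been disambiguated into stable IDs; the `authorships` field is a hypothetical layout.

```python
def talent_pool(docs: list[dict], unit: str) -> int:
    """Number of distinct authors affiliated with `unit` across all its documents.

    Each document carries hypothetical (author_id, unit_id) authorship pairs.
    """
    authors = {
        author_id
        for doc in docs
        for author_id, author_unit in doc["authorships"]
        if author_unit == unit
    }
    return len(authors)


docs = [
    {"authorships": [("a1", "U"), ("a2", "U"), ("a3", "V")]},
    {"authorships": [("a1", "U"), ("a4", "U")]},
]
print(talent_pool(docs, "U"))  # 3: a1 is counted once although they sign both documents
```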

Scientific Leadership

Total number of documents published by an institution, region or country in which the main researcher is affiliated with the unit of analysis. The corresponding author of each document is taken as its main researcher. The indicator reflects an institution's ability to lead research projects (Moya-Anegón, 2012). It is a size-dependent indicator.

International Collaboration

Total number of documents of an institution, region or country whose authors are affiliated with different institutions, at least one of which is in a different country. This indicator reflects an institution's capacity to build international scientific collaboration networks (Guerrero-Bote, Olmeda-Gómez, & Moya-Anegón, 2013; Lancho-Barrantes, Guerrero-Bote, & Moya-Anegón, 2013; Chinchilla-Rodríguez et al., 2012). It is a size-dependent indicator.
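A minimal sketch of the International Collaboration count for an institution, assuming each document lists its affiliations as hypothetical (institution, country) pairs and that the unit's own country is known. The rule implemented is the one stated above: the document must be signed by the unit and include at least one affiliation in another country.

```python
def international_collaboration(docs: list[dict], unit: str, unit_country: str) -> int:
    """Count the unit's documents with at least one affiliation in another country.

    Each document lists hypothetical (institution_id, country_code) affiliation pairs.
    """
    count = 0
    for doc in docs:
        affiliations = doc["affiliations"]
        if not any(inst == unit for inst, _ in affiliations):
            continue  # the unit did not sign this document
        # A co-affiliation in another country is treated as a different institution abroad.
        if any(country != unit_country for _, country in affiliations):
            count += 1
    return count


docs = [
    {"affiliations": [("U", "ES"), ("X", "PT")]},  # Spain-Portugal: international
    {"affiliations": [("U", "ES"), ("Y", "ES")]},  # domestic collaboration only
    {"affiliations": [("Z", "BR"), ("X", "PT")]},  # not signed by U
]
print(international_collaboration(docs, "U", "ES"))  # 1
```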

High Quality Publications

Total number of documents of an institution, region or country published in journals ranked in the top 25% (Q1) of their subject category according to the SCImago Journal Rank (SJR) indicator (Miguel, Chinchilla-Rodríguez, & Moya-Anegón, 2011). It reflects the institution's capacity to publish in journals with a high expected impact. It is a size-dependent indicator.
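The Q1 criterion can be sketched by ranking the journals of one subject category by SJR and keeping the top quarter. This is a simplified illustration: the cutoff rule (rank positions up to a quarter of the category size) and the assumption that each document is assigned to a single category are ours, and SJR's actual quartile computation may differ, particularly for small categories.

```python
def q1_journals(sjr_by_journal: dict[str, float]) -> set[str]:
    """Journals ranked in the top 25% of one subject category by SJR.

    Simplified rule (an assumption): keep rank positions 1..len // 4. Ties keep
    dictionary order because sorted() is stable. Categories with fewer than
    four journals get no Q1 journal under this rule.
    """
    ranked = sorted(sjr_by_journal, key=sjr_by_journal.get, reverse=True)
    return set(ranked[: len(ranked) // 4])


def high_quality_publications(docs: list[dict],
                              sjr_by_category: dict[str, dict[str, float]]) -> int:
    """Count documents whose journal is Q1 in the document's (single, assumed) category."""
    q1 = {cat: q1_journals(scores) for cat, scores in sjr_by_category.items()}
    return sum(1 for d in docs if d["journal"] in q1.get(d["category"], set()))


sjr_by_category = {"Physics": {"J1": 3.0, "J2": 1.5, "J3": 0.9, "J4": 0.4}}
docs = [
    {"journal": "J1", "category": "Physics"},    # Q1 in Physics
    {"journal": "J2", "category": "Physics"},    # Q2 in Physics
    {"journal": "J1", "category": "Chemistry"},  # no SJR data for this category here
]
print(high_quality_publications(docs, sjr_by_category))  # 1
```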

Excellence

Number of documents of an institution, region or country that are among the 10% most cited documents in their respective scientific field. It is a measure of high-quality research performance (Bornmann; Moya-Anegón; Leydesdorff, 2012; Bornmann et al., 2014). It is a size-dependent indicator.
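The top-10% criterion behind Excellence can be sketched as a percentile test within one subject category. This is a minimal illustration under stated assumptions, not SCImago's procedure: the comparison pool is a single list of citation counts for one category, and ties at the threshold are handled by including every tied document, a choice the text does not specify.

```python
from bisect import bisect_right


def top10_flags(category_citations: list[int]) -> list[bool]:
    """Flag documents among the 10% most cited of their subject category.

    A document is flagged when fewer than 10% of the category's documents have
    strictly more citations, so tied documents at the boundary are all included.
    """
    ordered = sorted(category_citations)
    n = len(ordered)
    flags = []
    for cites in category_citations:
        strictly_more = n - bisect_right(ordered, cites)
        flags.append(strictly_more * 10 < n)  # integer form of strictly_more / n < 0.10
    return flags


citations = [0, 1, 2, 3, 5, 8, 10, 20, 30, 50]
flags = top10_flags(citations)  # only the document with 50 citations is flagged
# Hypothetical: the unit of analysis signed the documents with 8 and 50 citations.
unit_positions = [5, 9]
excellence = sum(flags[i] for i in unit_positions)
print(excellence)  # 1
```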

Open Access

Percentage of documents published in open access journals or indexed in the Unpaywall database. It is a size-independent indicator.
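The Open Access percentage is a simple ratio. In this sketch the two boolean fields are hypothetical names, and we read "indexed in Unpaywall" as Unpaywall reporting an open copy of the document, which is an interpretation rather than a documented rule.

```python
def open_access_percentage(docs: list[dict]) -> float:
    """Percentage of documents in an OA journal or with an OA copy reported by Unpaywall.

    `oa_journal` and `unpaywall_oa` are hypothetical boolean fields.
    """
    if not docs:
        raise ValueError("the percentage is undefined for an empty set of documents")
    open_docs = sum(1 for d in docs if d["oa_journal"] or d["unpaywall_oa"])
    return 100.0 * open_docs / len(docs)


docs = [
    {"oa_journal": True, "unpaywall_oa": False},
    {"oa_journal": False, "unpaywall_oa": True},
    {"oa_journal": True, "unpaywall_oa": True},   # counted once
    {"oa_journal": False, "unpaywall_oa": False},
]
print(open_access_percentage(docs))  # 75.0
```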

Not Own Journals

Number of documents NOT published in journals edited by the institution itself. It is a size-dependent indicator.

Own Journals

Number of journals edited and published by the institution. It is a size-dependent indicator.

Innovation

The innovation indicators measure the capacity of institutions, regions or countries to generate patents or contribute to their development. They consider both the number of patent applications and the number and percentage of an institution's publications cited in patent application documents. The source used to calculate the indicators of this factor is the PATSTAT database[4].

Indicators

Innovative Knowledge

Number of an institution's publications that are cited in patents. This indicator shows the institution's capacity to generate knowledge that contributes to the creation of new technologies and inventions, knowledge that is not only likely to have commercial value but can also generate short-term societal impact (Wilsdon et al., 2015; Moya-Anegón; Chinchilla-Rodríguez, 2015). It is a size-dependent indicator.

Patents

Number of patent applications (counted as simple patent families). This indicator shows the institution's ability to appropriate knowledge and generate new technologies or inventions. It is a size-dependent indicator.

Technological Impact

Percentage of an institution's publications cited in patents. The denominator includes only the institution's publications in the subject areas that are cited in patents. These areas are: Agricultural and Biological Sciences; Biochemistry, Genetics and Molecular Biology; Chemical Engineering; Chemistry; Computer Science; Earth and Planetary Sciences; Energy; Engineering; Environmental Science; Health Professions; Immunology and Microbiology; Materials Science; Mathematics; Medicine; Multidisciplinary; Neuroscience; Nursing; Pharmacology, Toxicology and Pharmaceutics; Physics and Astronomy; Social Sciences; Veterinary. It is a size-independent indicator.
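The relationship between Innovative Knowledge (a count) and Technological Impact (a percentage over a restricted denominator) can be sketched as follows. The area list is copied from the text; the `areas` and `patent_citations` fields are hypothetical, and including a multi-area document in the denominator when any of its areas is on the list is our assumption.

```python
# Subject areas listed in the text as those cited in patents.
PATENT_CITED_AREAS = frozenset({
    "Agricultural and Biological Sciences",
    "Biochemistry, Genetics and Molecular Biology",
    "Chemical Engineering",
    "Chemistry",
    "Computer Science",
    "Earth and Planetary Sciences",
    "Energy",
    "Engineering",
    "Environmental Science",
    "Health Professions",
    "Immunology and Microbiology",
    "Materials Science",
    "Mathematics",
    "Medicine",
    "Multidisciplinary",
    "Neuroscience",
    "Nursing",
    "Pharmacology, Toxicology and Pharmaceutics",
    "Physics and Astronomy",
    "Social Sciences",
    "Veterinary",
})


def innovative_knowledge(docs: list[dict]) -> int:
    """Number of documents cited by at least one patent."""
    return sum(1 for d in docs if d["patent_citations"] > 0)


def technological_impact(docs: list[dict]) -> float:
    """Percentage of documents in patent-cited areas that are cited in patents."""
    in_scope = [d for d in docs if d["areas"] & PATENT_CITED_AREAS]
    if not in_scope:
        raise ValueError("no documents in patent-cited subject areas")
    return 100.0 * innovative_knowledge(in_scope) / len(in_scope)


docs = [
    {"areas": {"Chemistry"}, "patent_citations": 2},
    {"areas": {"Medicine", "Nursing"}, "patent_citations": 0},
    {"areas": {"Arts and Humanities"}, "patent_citations": 0},
]
print(innovative_knowledge(docs))  # 1
print(technological_impact(docs))  # 50.0: the Arts and Humanities paper is outside the denominator
```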

Societal impact

The societal impact indicators reflect web publishing practices that help increase the visibility of scientific output and institutional reputation. Since 2019, the Societal Impact factor has also included an indicator based on alternative metrics. The sources used to calculate the indicators of this factor are PlumX Metrics[5] and Mendeley[6]. Exceptionally, given the nature of these data, only the last year is used as the time window. These indicators are size-dependent.

Indicators

PlumX Metrics

Number of documents with at least one mention in PlumX Metrics, counting mentions on Twitter, Facebook, blogs, Reddit, SlideShare, Vimeo or YouTube.

Mendeley

Number of documents that have at least one reader (single-user) in Mendeley.

Impact in public policy - Overton

Number of documents of the institution that have been cited in policy documents according to the Overton database. Size-dependent. Added in the 2024 edition.

Female Scientific Talent Pool

Number of different female authors of scientific papers from an institution. Size-dependent. Added in the 2024 edition.

Sustainable Development Goals

Number of documents related to the Sustainable Development Goals defined by the United Nations. Data are available from 2018 onwards (included from the 2018-2022 period). Size-dependent. Added in the 2024 edition.

Bibliography

Bordons, María; Fernández, María-Teresa; Gómez-Caridad, Isabel (2002). “Advantages and limitations in the use of impact factor measures for the assessment of research performance”. Scientometrics, v. 53, n. 2, pp. 195-206. https://doi.org/10.1023/A:1014800407876

Bornmann, Lutz; Gralka, Sabine; Moya-Anegón, Félix; Wohlrabe, Klaus (2020). “Efficiency of Universities and Research-Focused Institutions Worldwide: An Empirical DEA Investigation Based on Institutional Publication Numbers and Estimated Academic Staff Numbers”. CESifo Working Paper No. 8157.

Bornmann, Lutz (2017). “Measuring impact in research evaluations: a thorough discussion of methods for, effects of and problems with impact measurements”. Higher education, v. 73, n. 5, pp. 775-787. https://doi.org/10.1007/s10734-016-9995-x

Bornmann, Lutz; Stefaner, Moritz; De-Moya-Anegón, Félix; Mutz, Rüdiger (2014). “Ranking and mapping of universities and research-focused institutions worldwide based on highly-cited papers”. Online information review, v. 38, n. 1, pp. 43-58. https://doi.org/10.1108/OIR-12-2012-0214

Bornmann, Lutz; De-Moya-Anegón, Félix; Leydesdorff, Loet (2012). “The new excellence indicator in the World Report of the SCImago Institutions Rankings 2011”. Journal of informetrics, v. 6, n. 2, pp.333-335. https://doi.org/10.1016/j.joi.2011.11.006

Bornmann, Lutz; Mutz, Rüdiger; Neuhaus, Christoph; Daniel, Hans-Dieter (2008). “Citation counts for research evaluation: standards of good practice for analyzing bibliometric data and presenting and interpreting results”. Ethics in science and environmental politics, v. 8, pp. 93-102. https://doi.org/10.3354/esep00084

Chinchilla-Rodríguez, Zaida; Benavent-Pérez, María; Moya-Anegón, Félix; Miguel, Sandra (2012). “International collaboration in medical research in Latin America and the Caribbean (2003-2007)”. Journal of the American Society for Information Science and Technology, v. 63, n. 11, pp. 2223-2238. https://doi.org/10.1002/asi.22669

Codina-Canet, María-Adelina; Olmeda-Gómez, Carlos; Perianes-Rodríguez, Antonio (2013). “Análisis de la producción científica y de la especialización temática de la Universidad Politécnica de Valencia. Scopus (2003-2010)”. Revista española de documentación científica, v. 36, n. 3, e019. https://doi.org/10.3989/redc.2013.3.942

González-Pereira, Borja; Guerrero-Bote, Vicente P.; Moya-Anegón, Félix (2010). “A new approach to the metric of journals’ scientific prestige: The SJR indicator”. Journal of informetrics, v. 4, n. 3, pp. 379-391. https://doi.org/10.1016/j.joi.2010.03.002

Gorraiz, J.; Ulrych, U.; Glänzel, W.; Arroyo-Machado, W.; Torres-Salinas, D. (2021). “Measuring the excellence contribution at the journal level: an alternative to Garfield’s impact factor”. Scientometrics, v. 127, n. 12. https://doi.org/10.1007/s11192-022-04295-9

Guerrero-Bote, Vicente P.; Moed, Henk F.; Moya-Anegón, Félix (2021). “New indicators of the technological impact of scientific production”. Journal of data and information science, v. 6, n. 4. https://doi.org/10.2478/jdis-2021-00

Guerrero-Bote, Vicente P.; Sánchez-Jiménez, Rodrigo; Moya-Anegón, Félix (2019). “The citation from patents to scientific output revisited: A new approach to the matching Patstat / Scopus”. El profesional de la información, v. 28, n. 4, e280401. https://doi.org/10.3145/epi.2019.jul.01

Guerrero-Bote, Vicente P.; Olmeda-Gómez, Carlos; Moya-Anegón, Félix (2013). “Quantifying the benefits of international scientific collaboration”. Journal of the American Society for Information Science and Technology, v. 64, n. 2, pp. 392-404. https://doi.org/10.1002/asi.22754

Guerrero-Bote, Vicente P.; Moya-Anegón, Félix (2012). “A further step forward in measuring journals’ scientific prestige: The SJR2 indicator”. Journal of informetrics, v. 6, n. 4, pp. 674-688. https://doi.org/10.1016/j.joi.2012.07.001

Lancho-Barrantes, Bárbara S.; Guerrero-Bote, Vicente P.; Moya-Anegón, Félix (2013). “Citation increments between collaborating countries”. Scientometrics, v. 94, n. 3, pp. 817-831. https://doi.org/10.1007/s11192-012-0797-3

Miguel, Sandra; Chinchilla-Rodríguez, Zaida; Moya-Anegón, Félix (2011). “Open Access and Scopus: A new approach to scientific visibility from the standpoint of access”. Journal of the American Society for Information Science and Technology, v. 62, n. 6, pp. 1130-1145. https://doi.org/10.1002/asi.21532

Mingers, John; Leydesdorff, Loet (2015). “A review of theory and practice in scientometrics”. European journal of operational research, v. 246, n. 1, pp. 1-19. https://doi.org/10.1016/j.ejor.2015.04.002

Moed, Henk F. (2015). “Multidimensional assessment of scholarly research impact”. Journal of the Association for Information Science and Technology, v. 66, pp. 1988. https://doi.org/10.1002/asi.23314

Moed, Henk F. (2009). “New developments in the use of citation analysis in research evaluation”. Archivum immunologiae et therapiae experimentalis, v. 57, pp. 13. https://doi.org/10.1007/s00005-009-0001-5

Moed, Henk F. (2005). Citation analysis in research evaluation. Springer Netherlands. ISBN: 978 14020 3714 6

Moya-Anegón, Félix; Chinchilla-Rodríguez, Zaida (2015). “Impacto tecnológico de la investigación universitaria iberoamericana”. In: Barro, Senén (coord.). La transferencia de I+D, la innovación y el emprendimiento en las universidades. Educación superior en Iberoamérica. Informe 2015. ISBN: 978 956 7106 63 9. http://hdl.handle.net/10261/115266

Moya-Anegón, Félix; Guerrero-Bote, Vicente P.; Bornmann, Lutz; Moed, Henk F. (2013). “The research guarantors of scientific papers and the output counting: a promising new approach”. Scientometrics, v. 97, n. 2, pp. 421-434. https://doi.org/10.1007/s11192-013-1046-0

Moya-Anegón, Félix (2012). “Liderazgo y excelencia de la ciencia española”. El profesional de la información, v. 21, n. 2, pp. 125-128. https://doi.org/10.3145/epi.2012.mar.01

OCDE; SCImago Research Group (2015). Scientometrics - OCDE. Retrieved from Compendium of Bibliometric Science Indicators 2014: http://oe.cd/scientometrics

Rehn, Catharina; Kronman, Ulf (2008). “Bibliometrics”. Karolinska Institutet Universitetsbiblioteket. https://kib.ki.se/en/publish-analyse/bibliometrics

Romo-Fernández, Luz M.; López-Pujalte, Cristina; Guerrero-Bote, Vicente P.; Moya-Anegón, Félix (2011). “Analysis of Europe’s scientific production on renewable energies”. Renewable energy, v. 36, n. 9, pp. 2529-2537. https://doi.org/10.1016/j.renene.2011.02.001

SEGIB (2023). Secretaría General Iberoamericana. Quiénes somos. Available at: https://www.segib.org/quienes-somos/

Van-Raan, Anthony F. J. (2004). “Measuring science”. In: Moed, Henk F.; Glänzel, Wolfgang; Schmoch, Ulrich. Handbook of quantitative science and technology research. Dordrecht: Springer. ISBN: 978 1402027024

Waltman, Ludo (2016). “A review of the literature on citation impact indicators”. Journal of informetrics, v. 10, n. 2, pp. 365-391. https://doi.org/10.1016/j.joi.2016.02.007

Waltman, Ludo; Van-Eck, Nees-Jan; Van-Leeuwen, Thed N.; Visser, Martijn S.; Van-Raan, Anthony F. J. (2011). “On the correlation between bibliometric indicators and peer review: Reply to Opthof and Leydesdorff”. Scientometrics, v. 88, n. 3, pp. 1017-1022. https://doi.org/10.1007/s11192-011-0425-7

Wilsdon, James; Allen, Liz; Belfiore, Eleonora; Campbell, Philip; Curry, Stephen; Hill, Steven; Jones, Richard; Kain, Roger; Kerridge, Simon; Thelwall, Mike; Tinkler, Jane; Viney, Ian; Wouters, Paul; Hill, Jude; Johnson, Ben (2015). The Metric Tide: Report of the independent review of the role of metrics in research assessment and management. ISBN: 1902369273. https://doi.org/10.13140/RG.2.1.4929.1363

[1] Editions published from 2017 onwards can be consulted at http://www.profesionaldelainformacion.com/informes_scimago_epi.html

[2] For the purposes of the SCImago Iber, the 22 States that make up the Ibero-American General Secretariat are considered Ibero-American countries (see https://www.segib.org/quienes-somos/).

[3] https://kib.ki.se/en/publish-analyse/bibliometrics#header-6

[4] www.epo.org

[5] https://plumanalytics.com

[6] https://www.mendeley.com